HPC @ University of Bern: ubelix – Uni BErn LInuX cluster

See also the original slides by Andres Aeschlimann (PDF).

Purpose

This grid/HPC infrastructure is designed primarily to support researchers on campus. They should be able to spend their time doing research rather than deploying a grid/HPC infrastructure of their own.

Some facts

- First Linux cluster installed in 2001 (1 master node and 32 single-core nodes)

- Continuously expanded since then, to ~1000 cores in more than 200 nodes today

- Dual-core and quad-core worker nodes

- Mostly AMD Opteron processors, with an increasing number of Intel (Nehalem) processors

- Several suppliers (mostly Sun, but currently also IBM and some Dell)

- Total power draw below 100 kW

- Gentoo Linux (www.gentoo.org)

- Linux kernels 2.6.22 and 2.6.27

- 2 TB memory, 50 TB disk

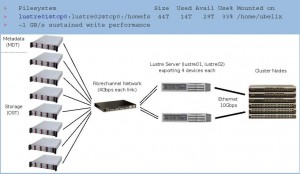

- Lustre file system 1.8.1

- Sun Grid Engine 6.2

- Gigabit Ethernet switch

- Currently no InfiniBand switch

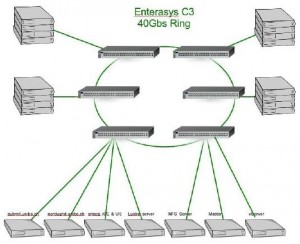

Internal (private) network

- TCP/IP

- Stackable switches (~40 Gbit/s)

- Standard Gigabit Ethernet on the worker nodes

- 10 Gigabit Ethernet (10GE) for high-throughput servers

Lustre@ubelix

Application portfolio (local users)

- High Energy Physics

- Astronomy

- Computational and Molecular Population Genetics Lab

- Space Research Physics

- Computer Vision and Artificial Intelligence

- Chemistry and Biochemistry

- …

Applications from remote users (SMSCG, Swiss Multi-Science Computing Grid)

- ATLAS: high-energy physics application developed for the ATLAS experiment at the LHC at CERN

- RSA768: cryptographic application

- NAMD and GROMACS: molecular dynamics applications (biochemistry)

- GAMESS: quantum chemistry application (work in progress)

- …

Other clusters @ UniBE

- The LHEP UNIBE ATLAS T3 (2009): a ROCKS cluster with ~200 cores (Sun Fire X2200 1U servers with two quad-core CPUs each) and ~50 TB of storage, running CentOS. It is located in the same room as the ID UNIBE cluster. It mainly serves local and remote ATLAS scientists, and spare capacity is backfilled with remote users and applications. Special feature: access is only via ARC clients, i.e. remote and local users have the same interface. http://ce.lhep.unibe.ch

- Theoretical Physics (~200 cores, with high-speed interconnect)

- Climate Physics (~100 cores)

- Space Physics (~100 cores)

- Chemistry (~100 cores, with high-speed interconnect)

- Computational and Molecular Population Genetics (~60 cores)