Slidecasts of Introduction to OpenACC 2.0 and CUDA 5.5 Course

CSCS organized in December in Lugano a 3-day training course on GPU programming.

Experts from Cray and NVIDIA, together with CSCS staff teached participants the basics of programming in the latest versions of OpenACC and CUDA, together with tools for code debugging and parallel performance analysis.

Agenda

OpenACC

- OpenACC introduction and roadmap

- Programming exercises using the Cray OpenACC Compiler

- Code development, debugging and performance analysis tools (OpenACC)

Cuda

- CUDA introduction and roadmap

- New and improved features in CUDA 6

- CUDA 5.5 programming exercises

- Code development, debugging and performance analysis tools (CUDA)

- CUDA and OpenACC interoperability

3rd party Tools

- DDT (debugging)

- Vampir (performance analysis)

Slidecasts

Introduction to CSCS and the Course; Themis Athanassiadou (CSCS) »

An introduction to OpenACC by Alistair Hart (Cray)

- Part 1 » This lecture provides a first introduction to OpenACC, showing the first steps in accelerating a loop-based code using the “parallel loop” and “data” directives. Variable shared and private scoping is introduced. I also give a brief overview of the architecture of the Cray XC30 and of the Nvidia Kepler K20x GPU, to help developers understand whether their code will perform well on such architectures.

- Part 2 » I demonstrate step-by-step how to successfully accelerate a simple application (the Himeno solver) using only the “parallel loop” and “data” directives. I also show how to get information on scheduling and performance using: the Cray compiler loopmark; the Cray OpenACC runtime debugging; the Nvidia Compute Profiler; and the CrayPAT performance analysis tool.

- Part 3 » I first describe some extra OpenACC features (the “update” directive, array sectioning and “present” clauses). I also discuss race conditions and how to avoid them, as well as a few other “gotchas” that can lead to incorrect results. I then cover performance tuning using the “gang”, “worker” and “vector” clauses as well as “collapse” and “cache”. I’ve got a few examples to show how much performance we might gain through tuning, first with simple kernels and then with the Himeno benchmark. Finally, I discuss asynchronicity and how to handle streams of tasks and dependency trees with OpenACC.

- Part 4 » In this lecture, I describe how to port a larger code to run on a GPU using OpenACC. This is done using the example of the NPB MG code. I show how to use loop-level profiling with the Cray compiler and CrayPAT tool to understand application structure and identify suitable accelerator kernels. I then port the entire code step-by-step, showing performance data and profiles for each step. I show how to identify and avoid common performance bottlenecks.

- Part 5 » I briefly discuss the remainder of the OpenACC programming model, including unstructured data regions, the runtime API and the “host_data” interoperability directive. I show how to use this directive to call a CUDA kernel from OpenACC.

- Part 6 » In this talk, I discuss the extra complications in porting a parallel, message-passing code to use OpenACC, using the example of the parallel Himeno code. In particular, I show how asynchronicity and dependency trees can be used to give best overlap of computation and communication. I also show how best to combine MPI single-sided communication with OpenACC asynchronicity, including when “G2G” MPI is called with GPU-resident buffers. The lecture concludes with a brief discussion of OpenACC features planned for future versions of the standard, and a comparison of OpenACC with the new OpenMP accelerator directives.

CUDA by Peter Messmer (NVIDIA)

- Part A: GPU Architecture Overview and CUDA Basics »

- Part B: New Features in CUDA 5 on Kepler »

- Part C: The CUDA Toolkit: Libraries, Profilers, Debuggers »

- Part D: GPU Optimization Part 2 »

- Part E: CUDA 6 »

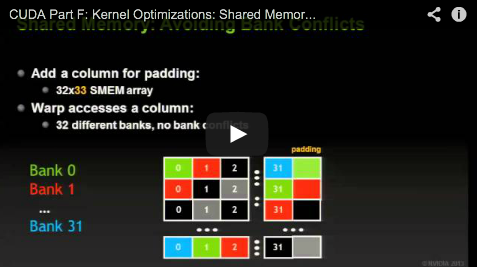

- Part F: Kernel Optimizations: Shared Memory Accesses »

Getting the best out of Hybrid Performance Tools; Jean-Guillaume Piccinali (CSCS) »

Getting the best out of Hybrid Debugging Tools; Jean-Guillaume Piccinali (CSCS) »